Face Recognition using AWS on iOS

Posted by Pranjal and Lakshman on March 3, 2017

Face detection and recognition have long been tricky problems to work with. Rich open-source libraries such as OpenCV and OpenBR (which is itself based on OpenCV) can carry out this task, but they take a large amount of effort and have a steep learning curve before they can be used effectively. However, very recently (November 2016), AWS introduced a service called "Rekognition" that can detect and recognize objects and faces. Amazon Rekognition makes it easy to add image analysis to your applications. Besides faces, it can detect objects and scenes in images, and it also lets you search and compare faces.

Rekognition comes with some big claims, so we decided to give the service a try. We started with the web interface provided by the service to try out some samples, and then we wrote some code as well. This article is a step-by-step account of both.

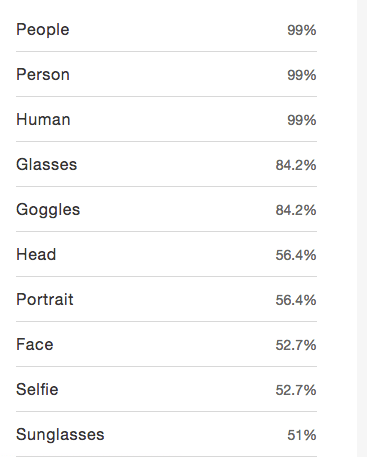

1. First, we wanted to see whether the object detection service could identify a face in an image, so we tried an image of a person wearing goggles. We were surprised to see that the service not only identified that there was a face in the image but also returned the following information:

So it was able to detect the human face and other objects in the image (in this case, the goggles), and it also made some intelligent observations about the image.

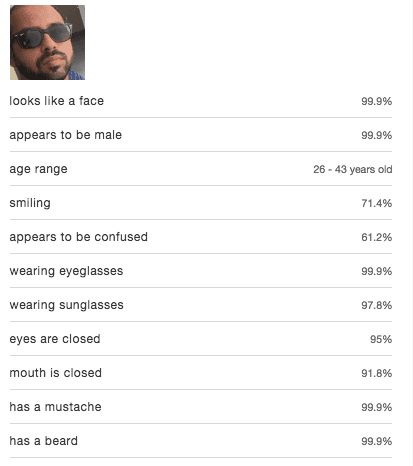

With the same image, we decided to go a step further and asked the service to extract details about the face. We got the following results:

It is really interesting that the service can provide accurate information such as age, gender, and emotion.

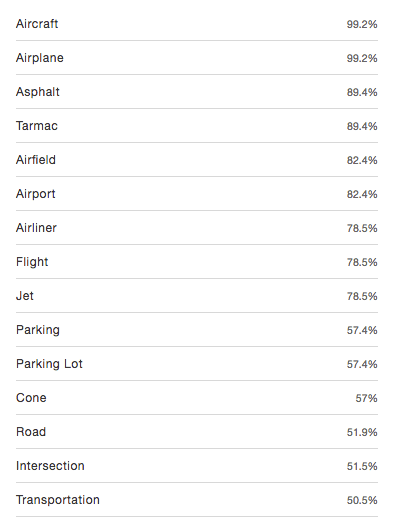

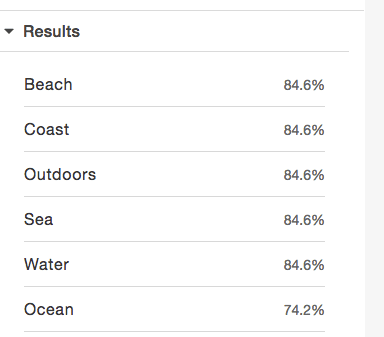

2. Next, we wanted to see whether it could pick out details from an arbitrary scene, so we ran the following experiments:

Input image:

The results we got were spot on, as shown below.

We expected the next one to be harder, but the service seems to have made the correct identifications:

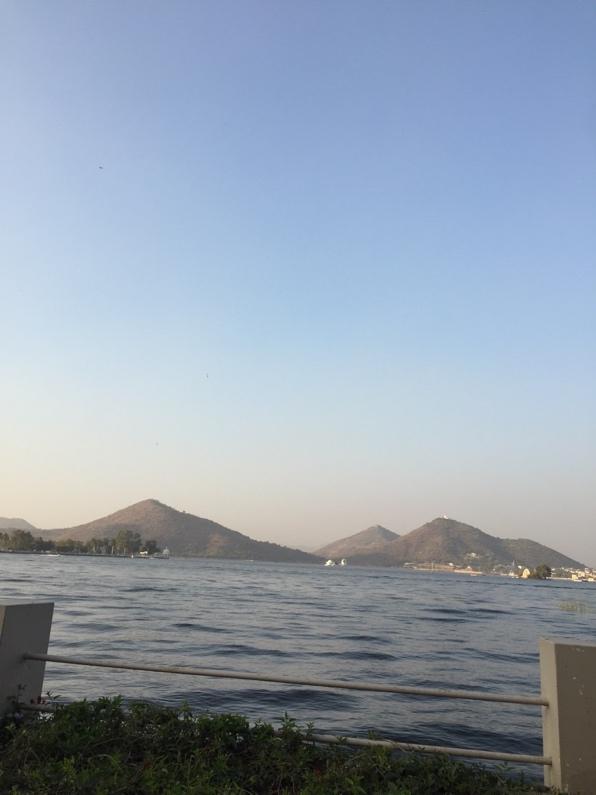

Input:

Results: this image shows a lake rather than a beach, but at a broad level we think the service deserves the points for this one as well.
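Through the API, the labels shown in these experiments come back from Rekognition's DetectLabels operation as JSON: a `Labels` array whose entries carry a `Name` and a `Confidence` percentage. The following is a minimal sketch, assuming only that response shape, that decodes such a payload with Foundation's `JSONDecoder` and keeps the labels above a confidence cut-off. The helper `confidentLabels(in:minConfidence:)`, the 75% cut-off, and the sample payload are all hypothetical illustrations, not the results we actually received.

```swift
import Foundation

// Minimal model of a DetectLabels response: only the fields used below.
struct DetectLabelsResponse: Decodable {
    struct Label: Decodable {
        let name: String
        let confidence: Double
        enum CodingKeys: String, CodingKey {
            case name = "Name", confidence = "Confidence"
        }
    }
    let labels: [Label]
    enum CodingKeys: String, CodingKey { case labels = "Labels" }
}

/// Hypothetical helper: names of labels at or above `minConfidence`,
/// most confident first.
func confidentLabels(in json: Data, minConfidence: Double) throws -> [String] {
    let response = try JSONDecoder().decode(DetectLabelsResponse.self, from: json)
    return response.labels
        .filter { $0.confidence >= minConfidence }
        .sorted { $0.confidence > $1.confidence }
        .map { $0.name }
}

// Invented sample payload, for illustration only.
let sampleLabelsJSON = """
{"Labels": [
    {"Name": "Beach", "Confidence": 61.0},
    {"Name": "Person", "Confidence": 99.1},
    {"Name": "Sunglasses", "Confidence": 93.4}
]}
""".data(using: .utf8)!

print(try! confidentLabels(in: sampleLabelsJSON, minConfidence: 75))
// prints ["Person", "Sunglasses"]
```

The service itself also accepts a minimum-confidence parameter on DetectLabels; filtering on the client, as here, just makes the cut-off easy to tune without another request.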

So we were convinced that the service is really good at identifying objects and faces in a given image.
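The face details from the first experiment (gender, emotion, whether the person wears sunglasses) correspond to the `FaceDetails` array in the JSON returned by the DetectFaces operation. Here is a sketch, assuming a trimmed-down version of that shape, of how an app might decode it and pick the emotion with the highest confidence. The types below model only a few of the fields the service returns, and the sample values are invented.

```swift
import Foundation

// Minimal model of a DetectFaces response; real FaceDetails entries carry
// many more attributes (bounding box, landmarks, pose, and so on).
struct DetectFacesResponse: Decodable {
    struct FaceDetail: Decodable {
        // Shape shared by attributes such as Gender and Sunglasses
        struct Attribute<Value: Decodable>: Decodable {
            let value: Value
            let confidence: Double
            enum CodingKeys: String, CodingKey {
                case value = "Value", confidence = "Confidence"
            }
        }
        struct Emotion: Decodable {
            let type: String
            let confidence: Double
            enum CodingKeys: String, CodingKey {
                case type = "Type", confidence = "Confidence"
            }
        }
        let gender: Attribute<String>
        let sunglasses: Attribute<Bool>
        let emotions: [Emotion]
        enum CodingKeys: String, CodingKey {
            case gender = "Gender", sunglasses = "Sunglasses", emotions = "Emotions"
        }

        /// The emotion with the highest confidence, if any were returned.
        var dominantEmotion: String? {
            return emotions.max { $0.confidence < $1.confidence }?.type
        }
    }
    let faceDetails: [FaceDetail]
    enum CodingKeys: String, CodingKey { case faceDetails = "FaceDetails" }
}

// Invented sample payload, for illustration only.
let sampleFacesJSON = """
{"FaceDetails": [{
    "Gender": {"Value": "Male", "Confidence": 99.0},
    "Sunglasses": {"Value": true, "Confidence": 97.5},
    "Emotions": [
        {"Type": "HAPPY", "Confidence": 12.3},
        {"Type": "CALM", "Confidence": 80.2}
    ]
}]}
""".data(using: .utf8)!

let response = try! JSONDecoder().decode(DetectFacesResponse.self, from: sampleFacesJSON)
for face in response.faceDetails {
    print(face.gender.value, face.sunglasses.value, face.dominantEmotion ?? "none")
}
// prints: Male true CALM
```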

A hard-core programmer is never satisfied with just testing images through the interface provided by the service, so that's where we decided to dig deeper. We wanted to play with the APIs exposed by Amazon, so we opened Xcode and started on iOS development. Why iOS, you may ask? There are two reasons:

1. AWS does not provide direct examples for iOS, so we wanted to find out how easy or hard it would be to write the code based only on the documentation. Also, as this is a relatively new service, we expected little documentation or help to be available on the web, so if we succeeded we could help anyone else who tries this and gets stuck. That's why we published the code we developed in the process.

2. About a year ago, we had already spent quite a lot of time developing with OpenCV on iOS, so this seemed the logical next step.

How to develop for the service on iOS

We assume you already have an AWS account and know how to use the AWS console (explaining that here would turn this into a book, not a blog post).

Note: At the time of writing, this service was supported in only two regions, and we ran into some issues because we started out using another region (Mumbai).

1. Download the SDK from the Amazon developer site, or install it in your application using CocoaPods.

2. Create an IAM User.

3. Add the user to an IAM group with administrative permissions, and grant administrative permissions to the IAM user that you created.

4. On the code side, import the AWSRekognition and AWSCore frameworks and add the configuration to your AppDelegate.swift file:

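```swift
import AWSCore
import AWSRekognition

// AppDelegate.swift, inside application(_:didFinishLaunchingWithOptions:)
let credentialsProvider = AWSStaticCredentialsProvider(accessKey: "Your Access Key",
                                                       secretKey: "Your Secret Access Key")
let configuration = AWSServiceConfiguration(
    region: AWSRegionType.USWest2, // must be a region where Rekognition is available
    credentialsProvider: credentialsProvider)
AWSServiceManager.default().defaultServiceConfiguration = configuration
```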

5. Create an AWSRekognition client:

```swift
var rekognitionClient: AWSRekognition!

override func viewDidLoad() {
    super.viewDidLoad()
    // Uses the default service configuration registered in AppDelegate.swift
    rekognitionClient = AWSRekognition.default()
}
```

6. Create a collection on AWS. You can create multiple collections in the same region.

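```swift
// Inside a method of the view controller
guard let request = AWSRekognitionCreateCollectionRequest() else {
    puts("Unable to initialize AWSRekognitionCreateCollectionRequest.")
    return
}
request.collectionId = "my-collection" // placeholder collection name

rekognitionClient.createCollection(request) {
    (response: AWSRekognitionCreateCollectionResponse?, error: Error?) in
    if error == nil {
        print(response!)
    }
}
```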

7. Index faces into the collection so they persist. You can add images either directly from an S3 bucket or by passing the image bytes from the application. Rekognition stores the facial features it detects rather than the images themselves.

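```swift
guard let request = AWSRekognitionIndexFacesRequest() else {
    puts("Unable to initialize AWSRekognitionIndexFacesRequest.")
    return
}
request.collectionId = "my-collection"           // placeholder, same as step 6
request.detectionAttributes = ["ALL", "DEFAULT"] // return all facial attributes
request.externalImageId = "image-id"             // your own ID for this image (no spaces)

// Send the image as JPEG bytes instead of referencing an S3 object
let sourceImage = UIImage(named: "image")
let image = AWSRekognitionImage()
image!.bytes = UIImageJPEGRepresentation(sourceImage!, 0.7)
request.image = image

rekognitionClient.indexFaces(request) {
    (response: AWSRekognitionIndexFacesResponse?, error: Error?) in
    if error == nil {
        print(response!)
    }
}
```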

8. Perform face recognition against the faces stored in a particular collection. Matching faces are returned in an array. If the source image contains multiple faces, the face with the largest bounding box is used for the search.

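```swift
guard let request = AWSRekognitionSearchFacesByImageRequest() else {
    puts("Unable to initialize AWSRekognitionSearchFacesByImageRequest.")
    return
}
request.collectionId = "my-collection" // placeholder, the collection indexed in step 7
request.faceMatchThreshold = 75        // minimum similarity (percent) for a match
request.maxFaces = 2                   // return at most two matches

let sourceImage = UIImage(named: "Virat-Kohli-wt20")
let image = AWSRekognitionImage()
image!.bytes = UIImageJPEGRepresentation(sourceImage!, 0.7)
request.image = image

rekognitionClient.searchFaces(byImage: request) {
    (response: AWSRekognitionSearchFacesByImageResponse?, error: Error?) in
    if error == nil {
        print(response!)
    }
}
```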
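Step 8 simply prints the response. In JSON form, a SearchFacesByImage response carries a `FaceMatches` array in which each entry has a `Similarity` score and the matched `Face`, including the `ExternalImageId` that was set when the face was indexed. The following is a minimal sketch, assuming that shape, that maps a search result back to your own image ID by picking the most similar match explicitly rather than relying on array order. The types, the helper `bestMatchId(in:)`, and the sample IDs are hypothetical.

```swift
import Foundation

// Minimal model of a SearchFacesByImage response (only the fields used here).
struct SearchFacesByImageResponse: Decodable {
    struct FaceMatch: Decodable {
        struct Face: Decodable {
            let faceId: String
            let externalImageId: String? // absent if none was set when indexing
            enum CodingKeys: String, CodingKey {
                case faceId = "FaceId", externalImageId = "ExternalImageId"
            }
        }
        let similarity: Double
        let face: Face
        enum CodingKeys: String, CodingKey {
            case similarity = "Similarity", face = "Face"
        }
    }
    let faceMatches: [FaceMatch]
    enum CodingKeys: String, CodingKey { case faceMatches = "FaceMatches" }
}

/// Hypothetical helper: the ExternalImageId of the most similar match,
/// or nil when nothing in the collection cleared the threshold.
func bestMatchId(in json: Data) throws -> String? {
    let response = try JSONDecoder().decode(SearchFacesByImageResponse.self, from: json)
    return response.faceMatches.max { $0.similarity < $1.similarity }?.face.externalImageId
}

// Invented sample payload (IDs and scores are illustrative only).
let sampleSearchJSON = """
{"FaceMatches": [
    {"Similarity": 82.5, "Face": {"FaceId": "face-1", "ExternalImageId": "person-a"}},
    {"Similarity": 97.1, "Face": {"FaceId": "face-2", "ExternalImageId": "person-b"}}
]}
""".data(using: .utf8)!

print(try! bestMatchId(in: sampleSearchJSON) ?? "no match")
// prints person-b
```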

The process was really straightforward, and the service seems to work really well. If you know your code and how to navigate AWS infrastructure, this service should be straightforward to implement.
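Steps 7 and 8 both send the photo as JPEG bytes at a fixed quality of 0.7. Rekognition caps the raw image bytes in a request at 5 MB, so a large photo can still be rejected after compression. The sketch below steps down the JPEG quality until the payload fits, assuming a conservative limit of 5,000,000 bytes. The `encode` closure is a hypothetical injection point standing in for `UIImageJPEGRepresentation(image, quality)`, so the logic can run without UIKit, and the quality ladder is an arbitrary choice.

```swift
import Foundation

// Rekognition caps raw image bytes per request at 5 MB; 5,000,000 is used
// here as a conservative reading of that limit (an assumption).
let maxImageBytes = 5_000_000

/// Tries each JPEG quality in turn (highest first) and returns the first
/// encoding that fits within `limit` bytes, or nil if none does.
/// `encode` stands in for a JPEG encoder such as UIImageJPEGRepresentation.
func jpegData(fittingLimit limit: Int,
              qualities: [Double] = [0.9, 0.7, 0.5, 0.3, 0.1],
              encode: (Double) -> Data?) -> Data? {
    for quality in qualities {
        if let data = encode(quality), data.count <= limit {
            return data
        }
    }
    return nil
}

// Fake encoder for illustration: output size simply scales with quality.
let payload = jpegData(fittingLimit: maxImageBytes) { quality in
    Data(count: Int(quality * 8_000_000))
}
print(payload?.count ?? 0)
// prints 4000000 (qualities 0.9 and 0.7 produce payloads that are too large)
```

In the app, the closure would wrap the UIKit call used in steps 7 and 8, for example `{ UIImageJPEGRepresentation(sourceImage!, CGFloat($0)) }`.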

Related Links:

http://docs.aws.amazon.com/mobile/sdkforios/developerguide

http://docs.aws.amazon.com/rekognition/latest/dg/examples.html

AWS seems to have opened up amazing possibilities in face and object recognition, and this service can be used in a variety of ways. If you need help with this, feel free to ping us; we will be glad to help.

About Us: We at CodeFire love taking on challenging projects and cutting-edge work. If you have a complex project involving iOS, AWS, or both, we would be happy to hear from you and eager to help you solve it!

Business: H 92, Ground Floor, Sector 63, Noida, U.P